Scraping 101 Part 2

Scrape

As promised, we will now scrape gu.se. Our objective is to look at the gender distribution for various academic titles at the JMG department. We plan to do this by scraping the department's staff page. To reach our goal we need to:

- Find the page that lists all staff

- Figure out how to reach the interesting data

- In some way figure out the gender programmatically from the first names

- Collect the data

- Make some simple presentation

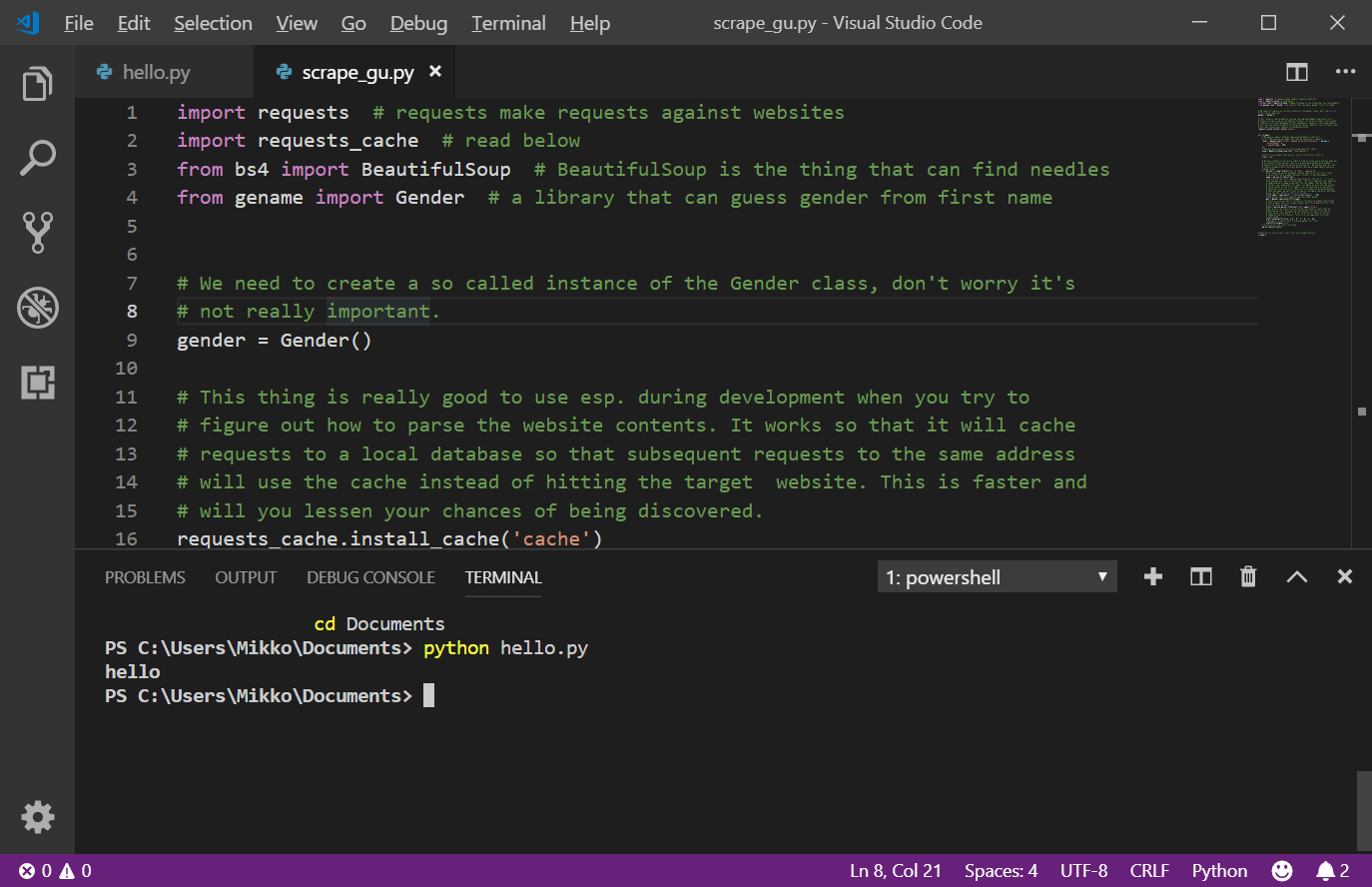

We will not go through the details of this program now, though it will not hurt to read the code and comments below. OK, now create a new file in your editor and copy and paste the following code:

import requests # requests make requests against websites
import requests_cache # read below
from bs4 import BeautifulSoup # BeautifulSoup is the thing that can find needles
from gename import Gender # a library that can guess gender from first name

# We need to create a so-called instance of the Gender class. Don't worry,
# it's not really important.
gender = Gender()

# This thing is really good to use, especially during development when you
# try to figure out how to parse the website contents. It caches requests in
# a local database so that subsequent requests to the same address use the
# cache instead of hitting the target website. This is faster and lessens
# your chances of being discovered.
requests_cache.install_cache('cache')


def scrape():
    # We make a request towards jmg using parameters that will
    # list 500 persons per page (that way we need only 1 request)
    resp = requests.get('https://jmg.gu.se/om-institutionen/', params={
        'selectedTab': 2,
        'itemsPerPage': 500,
    })

    # Now we need to parse this junk using beautiful soup
    soup = BeautifulSoup(resp.text, 'html.parser')

    # This is a variable that we will use to collect our stats in.
    stats = {}

    # We have looked in the source code to find out that every person's name
    # has a link with class person. This is a pretty good target as a unique
    # identifier of the entity we are interested in.
    all_persons = soup.find_all('a', {'class': 'person'})

    # We iterate over all the found a elements with the class person that we
    # already identified as a good target.
    for person in all_persons:
        # We found the person's a element, now we need to extract the string
        # value that the a element contains.
        name = person.string

        # To extract the first name we first split the string on the comma,
        # then we use the second part [1], which is the first name (just look
        # at the webpage to see this). We end up with a first name that has a
        # space character at the beginning and, for all we know, possibly
        # one at the end too, so we use strip() to remove these unwanted
        # whitespace characters.
        first_name = name.split(',')[1].strip()

        # Now we use the first name to speculate what gender
        gen = gender.speculate(first_name)

        # Title is a value that is located in the next td element. Inside
        # that element there is a span element with the attribute title,
        # and this is the value we want.
        title = person.find_next('td').span['title']

        # We collect the stats in the so-called "dictionary" stats that we
        # defined earlier. Here we just set a default for the title key so
        # that every title has M, F, U set to 0, but only if there is no key
        # with that title already. This is not so important to fully
        # understand :)
        stats.setdefault(title, {'M': 0, 'F': 0, 'U': 0})

        # We add 1 to the current title and gender for stats
        stats[title][gen] += 1

    # Print the stats collected
    print(stats)


# When we run the script it will call the scrape function.
scrape()

Save the file as scrape_gu.py.

Run it!

Let’s just try to run this thing and see what happens by typing python scrape_gu.py in the terminal. You should find that it did not work out so well; there will be an error something like:

Traceback (most recent call last):
  File "scrape_gu.py", line 1, in <module>
    import requests # requests make requests against websites
ModuleNotFoundError: No module named 'requests'

This says that we are missing a module called requests; in fact we are missing a few more. Modules are ready-made programs that we will use in our program. Look at the top of our program to see which modules we are importing: requests, requests_cache, bs4, gename. None of these are available to us until we install them; that is, they do not come with the installation of Python.
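If you want to see which of these modules are missing before running the scraper, you can ask Python whether it is able to locate each one. The following is a minimal sketch that uses only the standard library; the helper name missing_modules is made up for this illustration and is not part of the scraper.

```python
import importlib.util

# The four top-level modules imported at the top of scrape_gu.py.
REQUIRED = ["requests", "requests_cache", "bs4", "gename"]


def missing_modules(names):
    """Return the names that Python cannot locate in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All modules are installed.")
```

Any module that shows up as missing will trigger the same ModuleNotFoundError when the scraper tries to import it.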

Install some more

So we need to install these modules. Modules typically contain programs but may also contain other things, such as constants. OK, now install the modules by typing the following in your terminal:

pip install requests requests-cache bs4 gename

They will be installed after a short while.

Success

OK, try to run the program again by typing python scrape_gu.py in your terminal. This should print something like this:

{'Forskare, biträdande': {'F': 1, 'M': 0, 'U': 0}, 'Professor, UNESCO-professor i yttrandefrihet, medieutveckling och global politik': {'F': 1, 'M': 0, 'U': 0}, 'Datadriftledare': {'F': 0, 'M': 1, 'U': 0}, 'Administrativ chef, inst': {'F': 0, 'M': 1, 'U': 0}, 'Professor, Viceprefekt': {'F': 0, 'M': 1, 'U': 0}, 'Professor, Proprefekt': {'F': 1, 'M': 0, 'U': 0}, 'Universitetslektor, Docent': {'F': 1, 'M': 1, 'U': 0}, 'Universitetslektor': {'F': 4, 'M': 5, 'U': 1}, 'Personalhandläggare': {'F': 1, 'M': 0, 'U': 0}, 'Gästlärare': {'F': 0, 'M': 1, 'U': 0}, 'Universitetsadjunkt': {'F': 11, 'M': 5, 'U': 0}, 'Doktorand': {'F': 7, 'M': 6, 'U': 0}, 'Studieadministratör': {'F': 1, 'M': 1, 'U': 0}, 'Professor': {'F': 2, 'M': 2, 'U': 0}, 'Kommunikatör': {'F': 1, 'M': 0, 'U': 0}, 'Universitetslektor, Prefekt': {'F': 1, 'M': 0, 'U': 0}, 'Gästforskare': {'F': 1, 'M': 0, 'U': 0}, 'Universitetslektor, Studierektor': {'F': 1, 'M': 0, 'U': 0}}

So we have a dictionary of titles, and for each title we can read how many women and men hold it. We could improve this program, since some really hard-working academics have more than one title. We can also see that there is a 'U' for unknown gender; maybe the gender really cannot be told from the name, but there could be something we can improve when parsing the first names.
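One way to handle the multi-title case is to group people by their main title. The sketch below assumes that the text before the first comma is the main title, which looks plausible for the output above but is not something the website guarantees. The function name merge_by_main_title is made up for this illustration.

```python
def merge_by_main_title(stats):
    """Sum the gender counts of all titles that share the same main title."""
    merged = {}
    for title, counts in stats.items():
        # Assumption: the part before the first comma is the main title,
        # e.g. 'Professor, Viceprefekt' -> 'Professor'.
        main_title = title.split(',')[0].strip()
        totals = merged.setdefault(main_title, {'M': 0, 'F': 0, 'U': 0})
        for gen, count in counts.items():
            totals[gen] += count
    return merged


# A few entries copied from the output above.
sample = {
    'Professor': {'F': 2, 'M': 2, 'U': 0},
    'Professor, Viceprefekt': {'F': 0, 'M': 1, 'U': 0},
    'Professor, Proprefekt': {'F': 1, 'M': 0, 'U': 0},
    'Universitetslektor': {'F': 4, 'M': 5, 'U': 1},
    'Universitetslektor, Docent': {'F': 1, 'M': 1, 'U': 0},
}

merged = merge_by_main_title(sample)

# A very simple presentation: one line per main title.
for title, counts in sorted(merged.items()):
    print(f"{title:<20} F={counts['F']:>2} M={counts['M']:>2} U={counts['U']:>2}")
```

With this sample, the three Professor entries add up to 3 women and 3 men. In the scraper itself you could call such a function on stats just before printing.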

Did we learn anything?

First, step through what this program actually did by reading the code comments. When you are done, you will be ready for the next level: Part 3 of Scraping 101.
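To help with that read-through, you can try the name-splitting and counting steps on their own, without any network access. The sketch below runs them on three made-up names. Note that guess_gender is a hypothetical stand-in for the gender library and only knows the two names listed in it.

```python
# Made-up "Last name, First name" strings in the format the staff page uses,
# paired with titles. None of these are real people.
SAMPLE_ROWS = [
    ('Andersson, Anna ', 'Doktorand'),
    ('Berg,  Erik', 'Doktorand'),
    ('Lind, Kim', 'Professor'),
]

# Hypothetical stand-in for the gender guesser; 'U' means unknown.
KNOWN_NAMES = {'Anna': 'F', 'Erik': 'M'}


def guess_gender(first_name):
    return KNOWN_NAMES.get(first_name, 'U')


def count_rows(rows):
    stats = {}
    for name, title in rows:
        # 'Andersson, Anna ' -> ['Andersson', ' Anna '] -> 'Anna'
        first_name = name.split(',')[1].strip()
        gen = guess_gender(first_name)
        # Create the zeroed counters the first time a title is seen.
        stats.setdefault(title, {'M': 0, 'F': 0, 'U': 0})
        stats[title][gen] += 1
    return stats


print(count_rows(SAMPLE_ROWS))
```

The name 'Kim' is not in the stand-in's list, so it ends up under 'U', which is how the unknown entries in the real output come about.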